Is your code ready for retirement? Is it suffering from the diseases of old age? Do you have code you can’t even imagine retiring? It’s just too critical? Too pervasive? Too legacy?

Jon & The Widgets

Jon’s first job out of school was in the local widget factory, WidgetCo. Jon was young and enthusiastic, and he quickly took to the job of making widgets. The company was pleased with Jon and he grew in experience, learning more about making widgets and taking on ever more responsibility, until eventually he was responsible for all widget production.

After a couple of years, one of WidgetCo’s biggest customers started asking about a new type of square widget. They had only made circular widgets before, but with Jon’s expertise they thought they could take on this new market. The design team got started, working closely with Jon to design a new type of square widget for Jon to produce. It was a great success: WidgetCo were the first to market with a square widget and sales went through the roof.

Unfortunately, the new, more complex widget production pipeline was putting a lot of pressure on the packing team. With widgets of different shapes and different options all needing to be sorted and packed properly, mistakes were happening and orders were being returned. Management needed a solution and turned to Jon. The team realised that if Jon knew a bit more about how orders were going to be packed, he could organise the widget production line better to ensure the right number of each shape of widget, with the right options, was being made at the right time. This made the job much easier for the packers and customer satisfaction jumped.

A few years down the line and Jon was now a key part of the company. He was involved in all stages of widget production, from the design of new widgets and the tools to manufacture them through to production and packaging. But one day Jon got sick. He came down with a virus and was out for a couple of days: the company stopped dead. Suddenly management realised how critical Jon was to their operations – without Jon they were literally stuck. Before Jon was even back up to speed, management were already making plans for the future.

Shortly after, the sales team had a lead that needed a new hexagonal widget. Management knew this was a golden opportunity to try to remove some of their reliance on Jon. While Jon was involved in the initial design, the team commissioned a new production line and hired some new, inexperienced staff to run it. Unfortunately, hexagonal widgets were vastly more complex than the square ones and, without Jon’s experience, the new widget production line struggled. Quality was too variable, mistakes were being made and production was much too slow. The team were confident they could get better over time but management were unhappy. Meanwhile, Jon was still churning out his regular widgets, same as he always had.

But the packing team were in trouble again: with two uncoordinated production lines, at times they were inundated with widgets and at other times they were idle. Reluctantly, management agreed that the only solution was for Jon to take responsibility for coordinating both production lines. Their experiment to remove their reliance on Jon had failed.

A few years later still and the new production line had settled down; it never quite reached the fluidity of the original production line but sales were ok. But there was a new widget company offering a new type of octagonal widget. WidgetCo desperately needed to catch up. The design team worked with Jon but, with his workload coordinating two production lines, he was always busy – so the designers were always waiting on Jon for feedback. The truth was: Jon was getting old. His eyes weren’t what they used to be and the arthritis in his fingers made working the prototypes for the incredibly complex new widgets difficult. Delays piled up and management got ever more unhappy.

So what should management do?

Human vs Machine

When we cast a human in the central role in this story it sounds ridiculous. We can’t imagine a company being so reliant on one frail human being that it’s brought to its knees by an illness. But read the story as though Jon is a software system and suddenly it seems totally reasonable. Or if not reasonable, totally familiar. Throughout the software world we see vast edifices of legacy software, kept alive way past their best because nobody has the appetite to replace them. They become too ingrained, too critical: too big to fail.

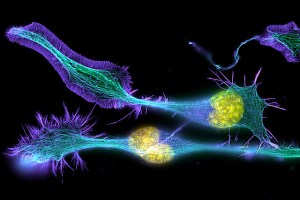

For all its artificial construction, software is not so different from a living organism. A large code base has a level of complexity comparable with that of an organism: too complex for any single human being to comprehend in complete detail. There will be outcomes and responses that can’t be explained completely, that require detailed research to understand the pathway that leads from stimulus to response.

Yet we treat software as though it were immortal, as though once written it will carry on working forever. The reality is that within a few years software becomes less nimble and harder to change. Under the growing weight of successive changes of direction and focus, it becomes slower and more bloated, each generation piling on the pounds. No development team has mastered turning back time, returning a creaking, elderly project to the glorious flush of youth where everything is possible and nothing takes any time at all.

It’s time to accept that software needs to be allowed to retire. Look around the code you maintain: what daren’t you retire? That’s where you should start. You should start planning to retire it soon, because if it’s bad now it is only getting worse. As the adage goes: the best time to start fixing this was five years ago; the second best time is now.

After five years all software is a bit creaky, not so quick to change as it once was. After ten years it’s well into legacy: standard approaches have moved on, tools have improved, and decade-old software just feels dated to work with. After twenty years it really should be allowed to retire already.

Software ages badly, so you need to plan for it from the beginning. From day one, start thinking about how you’re going to replace this system. A monolith will always be impossible to replace, so constantly think about breaking out separate components that could be retired independently. As soon as the code has grown bigger than you can throw away, it’s too late. Like a black hole, a monolith sucks in functionality: once you’re into runaway growth, your monolith will consume everything in its path. So keep things small and separate.
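To make "components that could be retired independently" a little more concrete, here is a minimal sketch in Python. It is only an illustration under assumptions: the names `WidgetPacker`, `LegacyPacker`, `NewPacker` and `PackingRouter` are hypothetical and borrowed from the WidgetCo story, not taken from any real system. The idea is that callers depend on one narrow interface, so the old implementation can be replaced a slice at a time and eventually deleted.

```python
from typing import Iterable, Protocol


class WidgetPacker(Protocol):
    """The narrow interface callers depend on (hypothetical)."""

    def pack(self, shape: str, count: int) -> str: ...


class LegacyPacker:
    """Stands in for the old code you would like to retire."""

    def pack(self, shape: str, count: int) -> str:
        return f"legacy:{count}x{shape}"


class NewPacker:
    """Stands in for the replacement, built separately."""

    def pack(self, shape: str, count: int) -> str:
        return f"new:{count}x{shape}"


class PackingRouter:
    """Sends each request to the old or new implementation.

    Callers only ever see this class, so moving a shape from
    legacy to new is a routing change, not a rewrite of callers.
    """

    def __init__(
        self,
        legacy: WidgetPacker,
        replacement: WidgetPacker,
        migrated_shapes: Iterable[str] = (),
    ) -> None:
        self._legacy = legacy
        self._replacement = replacement
        self._migrated = set(migrated_shapes)

    def migrate(self, shape: str) -> None:
        # Move one more slice of behaviour over to the replacement.
        self._migrated.add(shape)

    def pack(self, shape: str, count: int) -> str:
        target = self._replacement if shape in self._migrated else self._legacy
        return target.pack(shape, count)


router = PackingRouter(LegacyPacker(), NewPacker(), migrated_shapes={"hexagon"})
print(router.pack("circle", 2))   # still handled by the legacy packer
print(router.pack("hexagon", 3))  # already handled by the new packer
```

Once every shape has been migrated, nothing routes to `LegacyPacker` any more and it can be thrown away without touching the callers. That is the property worth protecting: no single piece ever grows bigger than you can afford to discard.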

What about the legacy you have today? Just start. Somewhere. Anywhere. The code isn’t getting any younger.