The pressure to deliver yesterday is strong. If it’s not customers nagging you, it’s project managers breathing down your neck or your own self-doubt that this should have been simpler: the desire to get the task done quicker can often be irresistible. How do you strike the right balance between cutting corners and polishing the turd?

While working through a feature, I maintain a “navigator pad” of things I want to come back to. These are refactorings I’ve spotted, tests that need cleaning up, design smells to look at or just plain questions I’m curious to know the answer to (can foo ever actually be null? is this method really used?). This list ebbs and flows as I work: some days I seem to do nothing but add new things to it, other days I manage to cross half the list off as some much-needed refactoring becomes critical to complete the next change. But the one constant throughout a feature is the nav pad.

Recently I was nearing the end of a feature and my nav pad didn’t seem to be getting any shorter. I’d spent a good bit of time refactoring things, but new problems kept appearing – it didn’t seem like I’d ever be “Done”. The feature was way behind schedule and my self-doubt was growing: I’m trying to do a good job, I don’t want this to take any longer, but I keep spotting things I got wrong before or simply missed. Suddenly one morning, within the space of a couple of hours, I crossed 20 items off the nav pad, sat back and realised: it’s empty! I was Done.

The next thing that struck me was what a strange occurrence this was: I couldn’t remember the last time I’d actually crossed everything off the nav pad. There would always be some last refactorings on the list that on balance could wait until another time; some tidy-up that could wait until another day; some question that I no longer cared to know the answer to. But for the first time in a long time, I’d crossed everything off!

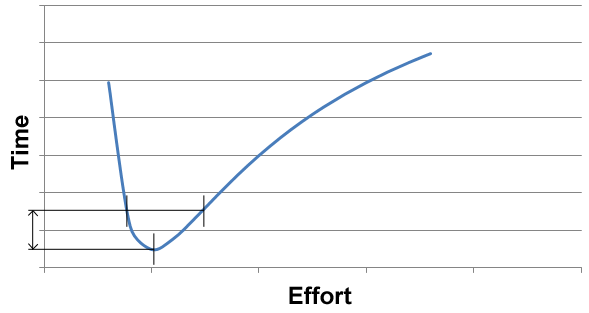

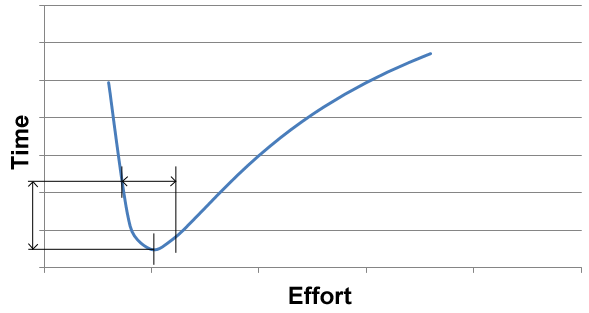

Then the doubt sets in: have I over-engineered this? Could I have been done quicker? The pressure to cut corners is really strong: we’re always pushed to be done faster, to do the absolute minimum we can get away with. Yet I know what needs to be done, I know what the problems are with this code: I’ve written them all down in the nav pad. If I don’t fix them now, then when?

There’s a pattern I see all too frequently when I come up against a design smell: I can see the design is wrong, the tests are a mess, the production code is a mess; there’s definitely a better way, I just can’t see it at the minute. I park the refactoring on the nav pad. I come back to it later after ticking off a few more parts of the feature, but I still can’t see a way to resolve the design smell. I spend a couple of hours refactoring back and forth – in the end I declare bankruptcy and raise an issue in the issue tracker. If I’m lucky I’ll pick up the issue again in a couple of months, have a half-hearted look at it, realise I can’t remember what I was really thinking at the time, and close the issue. More likely, after a few months with nobody picking up the issue, I’ll quietly close it. My code guilt has been neatly dealt with. But the crap code still remains.

The pressure to cut corners is incredibly strong, and it is strongest when you’re facing a particularly difficult design change. You’ve identified a problem in the design, probably made obvious by other changes you’ve made. You’re struggling to correct it, which means it isn’t easy to resolve; but it’s obviously a problem because you’ve already spent time trying to resolve it. That means the next time you come through here you’re going to spot the same problem and hate the you of today for not fixing it. And yet, this moment right now is the clearest you’ve ever understood the problem. If you give up now, you’ll have to reload into memory all the context you’ve got right now – what makes you think you’ll be in less of a rush in six months’ time? That you’ll have time to re-learn this code? Time to do what should have been done today?

The pressure to be done yesterday is strong, but today is the best you’ve ever understood this code: so use that understanding. If you’ve removed all the sharp edges you saw on your way through, then at least you’re leaving the code better than you found it. Tomorrow when you pass this way, you’ll pass through a little quicker, with fewer sharp edges to distract you. But today? Today you have code gardening to do.